Phillip Isola

1. Introduction

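Image-to-image translation is the task of taking an image as input and producing another image as output.

This paper presents pix2pix, a framework built on conditional GANs (cGANs) that suits image-to-image translation and gives good results across a variety of tasks. Just as automatic language translation is possible, automatic image-to-image translation can be defined as the task of converting one representation of a scene into another, given sufficient training data.

Unlike DCGAN, the input to the generator (G) is not a random vector but a conditioning input.

The goal of the paper is to develop a common framework for all of these problems, so that a single approach can handle many different tasks.

In CNN-based image prediction, pixel-wise distance losses such as L1 or L2 (Euclidean distance) tend to produce blurry results, but combining them with a GAN loss makes it possible to generate sharp images.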

2. Related work

This section covers structured losses for image modeling and conditional GANs.

* A distinguishing feature of this work is that it is not tailored to one specific application.

3. Method

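A GAN is a model that learns a mapping from a random noise vector z to an output image y, G: z → y. In contrast, a conditional GAN learns a mapping from a conditioning image x and a random noise vector z to an output image y, G: {x, z} → y.

In the paper's edges-to-photo illustration, the generator receives the edge map x as its condition and produces a realistic image, and the discriminator also receives the edge map x together with the generated image when judging it. Training the two against each other is what improves the model.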

3.1 Objective

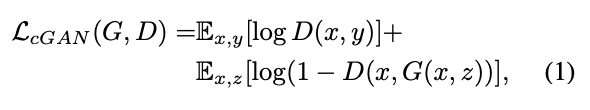

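The objective of the GAN is shown in the equation above.

A conditional GAN is the original GAN with only the condition x added.

In the original cGAN, a condition vector (for example, a class label) was attached to the latent vector z,

whereas here the input image x takes the place of that condition vector, which gives the equation above.

Because pix2pix trains on pairs {input image x, target image y}, the objective takes the form shown above.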

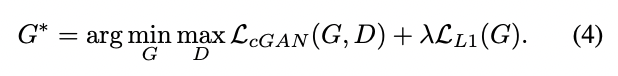

"Context Encoder" 논문의 결과를 받아들임 -> loss 함수를 설계할 때 traditional loss((ex: L2 loss))를 GAN loss와 섞어 사용하는 것이 효과적이라는 것.

실험 결과 L1 loss와 L2 loss 중 L1이 이미지의 blur함이 덜 해 L1 loss를 사용하기로 결정.

L1+cCAN이 젤 효과적인 것을 확인할 수 있다.

Random noise Z 사용 X?

G가 z를 무시하도록 학습이 되기 때문에, random noise Z를 사용하지 않는다.

Z가 없기 때문에 mapping 과정에서 stochastic((무작위))한 결과를 내지 못하고 deterministic한 결과를 내보내는 문제가 있지만, 논문에서는이를 future work로써 남겨두었다.

대신 조금의 무작위성이 생기도록 layer에 dropout을 적용하였다.
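Written out in the paper's notation, the objective described in this section is the following (x is the input image, y the target image, z the noise, and λ the weight on the L1 term; in the training code later in this post, `wgt` appears to play the role of λ):

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big] \\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G)
\end{aligned}
```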

3.2 Network architectures

3.2.1 Generator with skips

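Generator: based on U-Net

In a plain encoder-decoder, detail is lost while the image is downsampled and then upsampled again, which blurs the output. To avoid this, skip connections are added, as in the right-hand diagram of the figure.

The name U-Net comes from the fact that the architecture can be drawn in the shape of a U.

As the layers get deeper, fine detail does not survive unless it is passed in from somewhere else. Supplying it is the role of the skip connections.

This gives better results than the plain encoder-decoder.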

3.2.2 Markovian discriminator (PatchGAN)

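Discriminator: uses PatchGAN

A property of L1 loss: it captures low-frequency components well.

* Frequency: the degree of pixel variation in an image

- low-frequency: within an object, the color does not change much.

- high-frequency: at object boundaries (edges), the color changes sharply.

In other words, L1 loss gives blurry output but captures the low-frequency content accurately, so that part is left to L1 and the discriminator is designed to model the high-frequency structure.

=> The whole image is not needed; judging high-frequency structure on local image patches is enough.

PatchGAN:

Instead of the whole image, it classifies each patch of a given size as real or fake and averages the results.

Experiments were run with different patch sizes.

A 70x70 patch gave the most effective results.

=> Rather than judging the entire image at once, it is more efficient to choose a suitable patch size and train so that most of the patches are judged to be real.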
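The "patch size" of a PatchGAN is the receptive field of one discriminator output unit. The sketch below computes it for a stack of convolutions. The 70x70 layer layout (three stride-2 convolutions followed by two stride-1 convolutions, all 4x4) is an assumption based on the commonly used configuration, and `receptive_field` is a hypothetical helper name, not something from the post.

```python
def receptive_field(layers):
    """Receptive field of one output unit for a stack of conv layers.

    layers: list of (kernel_size, stride) tuples, ordered input -> output.
    """
    rf = 1
    # Walk backwards from the output: each layer widens the field it sees.
    for kernel_size, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel_size
    return rf


# Assumed 70x70 PatchGAN layout: three stride-2 convs, then two stride-1 convs.
patchgan_70 = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
print(receptive_field(patchgan_70))  # 70

# Four stride-2 4x4 convs, as in the Discriminator class later in this post.
four_stride2 = [(4, 2)] * 4
print(receptive_field(four_stride2))  # 46
```

Under these assumptions, the four-layer discriminator implemented later in this post sees a 46x46 patch per output unit rather than 70x70.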

3.3 Optimization and inference

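A few notable points.

- train:

1. When training G, maximize log D(x, G(x, z)) rather than minimize log(1 - D(x, G(x, z))).

2. When training D, the loss is divided by 2. This slows down the rate at which D learns relative to G.

- test:

1. Dropout is kept on at test time.

2. Batch normalization uses the statistics of the test batch.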
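The reason for the first training point can be illustrated with a little arithmetic. Writing D's output as sigmoid(a), the sketch below compares the gradient with respect to the logit a of the two generator objectives. The function names are hypothetical; only the math is standard.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def grad_saturating(a):
    # d/da log(1 - sigmoid(a)) = -sigmoid(a)
    return -sigmoid(a)


def grad_non_saturating(a):
    # d/da (-log sigmoid(a)) = -(1 - sigmoid(a))
    return -(1.0 - sigmoid(a))


# a = -6 means D is very confident that the generated image is fake.
for a in (-6.0, 0.0, 6.0):
    print(a, round(sigmoid(a), 4),
          round(grad_saturating(a), 4), round(grad_non_saturating(a), 4))
```

When D confidently rejects the generated image (a strongly negative), the gradient of log(1 - D) is close to zero, while the gradient of -log D stays close to -1, so G keeps receiving a useful training signal early on.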

4. Experiments

4.1 Evaluation metrics

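Two strategies are used to evaluate the results holistically.

1. A "real vs. fake" perceptual study with human raters on Amazon Mechanical Turk (AMT).

Raters look at an image and decide whether it is real or fake. The analysis uses the percentage of cases in which a generated image fooled the raters into believing it was real.

2. The "FCN-score", which measures whether the synthesized images are realistic enough for an existing classification model to recognize the objects in them.

An existing semantic segmentation model is run on the generated images to test how accurately it labels the objects per pixel and per class. The idea is that the closer a generated image is to a real one, the more accurately the segmentation model can segment the objects in it, so the analysis looks at per-pixel accuracy, per-class accuracy, and similar measures of the segmentation output.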
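The segmentation-based scores can be sketched in plain Python. The function below is a hypothetical helper that takes flat lists of predicted and ground-truth class ids and returns per-pixel accuracy, mean per-class accuracy, and mean class IoU. It illustrates the metrics only and is not the evaluation code used in the paper.

```python
def segmentation_scores(pred, target, num_classes):
    """Return (per-pixel accuracy, mean per-class accuracy, mean class IoU)."""
    # confusion[t][p] counts pixels with ground truth t that were predicted as p.
    confusion = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        confusion[t][p] += 1

    correct = sum(confusion[c][c] for c in range(num_classes))
    per_pixel_acc = correct / len(target)

    class_accs, ious = [], []
    for c in range(num_classes):
        true_pos = confusion[c][c]
        gt_count = sum(confusion[c])
        pred_count = sum(confusion[t][c] for t in range(num_classes))
        if gt_count > 0:  # skip classes absent from the ground truth
            class_accs.append(true_pos / gt_count)
        union = gt_count + pred_count - true_pos
        if union > 0:
            ious.append(true_pos / union)

    return (per_pixel_acc,
            sum(class_accs) / len(class_accs),
            sum(ious) / len(ious))


# Toy example: 8 pixels, 3 classes.
target = [0, 0, 0, 0, 1, 1, 2, 2]
pred = [0, 0, 0, 1, 1, 1, 2, 0]
print(segmentation_scores(pred, target, num_classes=3))
```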

4.4 From PixelGANs to PatchGANs to ImageGANs

Map -> aerial photo / aerial photo -> map

Semantic segmentation, which assigns labels to an image, was also performed.

=> For this task, L1 alone was the most accurate method.

5. Conclusion

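The results of the paper suggest that conditional adversarial networks are a promising approach for many image-to-image translation tasks, especially those involving structured graphical outputs.

The model often fails when the input is unusual, or when large regions of the input image are empty so that the model cannot tell what the input represents.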

6. Appendix

The appendix lists the network architectures and the parameters used for training.

Implementation

Model

Network Implementation

1. CBR2d : Convolution-BatchNormalization-ReLU

2. DECBR2d : ConvolutionTranspose-BatchNormalization-ReLU (for the decoder)
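The post does not show how these two helper blocks are defined. The following is a minimal sketch inferred from how they are called in the model code, so the default values and the meaning of the arguments are assumptions: `norm="bnorm"` adds batch normalization, `norm=None` skips it, `relu=0.0` means a plain ReLU, a positive `relu` is the LeakyReLU slope, and `relu=None` means no activation.

```python
import torch
import torch.nn as nn


def _norm_and_activation(channels, norm, relu):
    """Optional BatchNorm and (Leaky)ReLU layers shared by both blocks."""
    layers = []
    if norm == "bnorm":
        layers.append(nn.BatchNorm2d(channels))
    if relu is not None:
        layers.append(nn.ReLU() if relu == 0.0 else nn.LeakyReLU(relu))
    return layers


class CBR2d(nn.Module):
    """Convolution -> (BatchNorm) -> (ReLU / LeakyReLU)."""

    def __init__(self, in_channels, out_channels, kernel_size=3, stride=1,
                 padding=1, bias=True, norm="bnorm", relu=0.0):
        super().__init__()
        conv = nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size,
                         stride=stride, padding=padding, bias=bias)
        self.block = nn.Sequential(
            conv, *_norm_and_activation(out_channels, norm, relu))

    def forward(self, x):
        return self.block(x)


class DECBR2d(nn.Module):
    """Transposed convolution -> (BatchNorm) -> (ReLU / LeakyReLU)."""

    def __init__(self, in_channels, out_channels, kernel_size=3, stride=1,
                 padding=1, bias=True, norm="bnorm", relu=0.0):
        super().__init__()
        deconv = nn.ConvTranspose2d(in_channels, out_channels,
                                    kernel_size=kernel_size, stride=stride,
                                    padding=padding, bias=bias)
        self.block = nn.Sequential(
            deconv, *_norm_and_activation(out_channels, norm, relu))

    def forward(self, x):
        return self.block(x)
```

With kernel_size=4, stride=2 and padding=1, as used throughout the model, CBR2d halves the spatial size and DECBR2d doubles it.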

Generator

Encoder)

C64-C128-C256-C512-C512-C512-C512-C512

Decoder)

CD512-CD1024-CD1024-C1024-C1024-C512-C256-C128

(In this U-Net form, there is a skip connection between the i-th block of layers and the (8-i)-th block of layers.)

    import torch
    import torch.nn as nn

    # CBR2d and DECBR2d are the helper blocks listed under "Network Implementation".


    class Pix2Pix(nn.Module):
        # out_channels gets a default of 3 here, because a parameter without a
        # default cannot follow in_channels=3 in a Python signature.
        def __init__(self, in_channels=3, out_channels=3, nker=64, norm="bnorm"):
            super(Pix2Pix, self).__init__()

            # Encoder: C64-C128-C256-C512-C512-C512-C512-C512, LeakyReLU(0.2).
            # Each layer halves the spatial size (kernel 4, stride 2, padding 1).
            self.enc1 = CBR2d(in_channels, 1 * nker, kernel_size=4, padding=1,
                              norm=None, relu=0.2, stride=2)
            self.enc2 = CBR2d(1 * nker, 2 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)
            self.enc3 = CBR2d(2 * nker, 4 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)
            self.enc4 = CBR2d(4 * nker, 8 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)
            self.enc5 = CBR2d(8 * nker, 8 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)
            self.enc6 = CBR2d(8 * nker, 8 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)
            self.enc7 = CBR2d(8 * nker, 8 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)
            # If this layer outputs a 1x1 map, BatchNorm in training mode needs
            # more than one value per channel, so batch size 1 would fail here.
            self.enc8 = CBR2d(8 * nker, 8 * nker, kernel_size=4, padding=1,
                              norm=norm, relu=0.2, stride=2)

            # Decoder: ReLU, dropout on the first three blocks. Input channels
            # are doubled from dec2 onward because of the skip concatenation.
            self.dec1 = DECBR2d(8 * nker, 8 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.drop1 = nn.Dropout2d(0.5)
            self.dec2 = DECBR2d(2 * 8 * nker, 8 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.drop2 = nn.Dropout2d(0.5)
            self.dec3 = DECBR2d(2 * 8 * nker, 8 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.drop3 = nn.Dropout2d(0.5)
            self.dec4 = DECBR2d(2 * 8 * nker, 8 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.dec5 = DECBR2d(2 * 8 * nker, 4 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.dec6 = DECBR2d(2 * 4 * nker, 2 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.dec7 = DECBR2d(2 * 2 * nker, 1 * nker, kernel_size=4, padding=1,
                                norm=norm, relu=0.0, stride=2)
            self.dec8 = DECBR2d(2 * 1 * nker, out_channels, kernel_size=4, padding=1,
                                norm=None, relu=None, stride=2)

        def forward(self, x):
            # Encoder path
            enc1 = self.enc1(x)
            enc2 = self.enc2(enc1)
            enc3 = self.enc3(enc2)
            enc4 = self.enc4(enc3)
            enc5 = self.enc5(enc4)
            enc6 = self.enc6(enc5)
            enc7 = self.enc7(enc6)
            enc8 = self.enc8(enc7)

            # Decoder path: concatenate each decoder output with the matching
            # encoder output along the channel dimension (skip connection).
            dec1 = self.dec1(enc8)
            drop1 = self.drop1(dec1)
            cat2 = torch.cat((drop1, enc7), dim=1)
            dec2 = self.dec2(cat2)
            drop2 = self.drop2(dec2)
            cat3 = torch.cat((drop2, enc6), dim=1)
            dec3 = self.dec3(cat3)
            drop3 = self.drop3(dec3)
            cat4 = torch.cat((drop3, enc5), dim=1)
            dec4 = self.dec4(cat4)
            cat5 = torch.cat((dec4, enc4), dim=1)
            dec5 = self.dec5(cat5)
            cat6 = torch.cat((dec5, enc3), dim=1)
            dec6 = self.dec6(cat6)
            cat7 = torch.cat((dec6, enc2), dim=1)
            dec7 = self.dec7(cat7)
            cat8 = torch.cat((dec7, enc1), dim=1)
            dec8 = self.dec8(cat8)

            # tanh maps the output to [-1, 1]
            x = torch.tanh(dec8)
            return x

- The number of input channels is 3, so in_channels defaults to 3.

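- The pix2pix encoder starts at C64 and doubles from there, so nker defaults to 64 and the layers are built by doubling it.

- padding=1 is used (together with kernel_size=4 and stride=2) so that each layer halves the spatial size.

- Dropout (0.5) is applied up to the third block of decoder layers.

- forward() runs the encoder and decoder defined above in sequence.

: skip connections are implemented with torch.cat -> the outputs of the i-th and (8-i)-th blocks of layers are concatenated.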
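The size bookkeeping behind these notes can be checked with the standard output-size formulas for convolution and transposed convolution. This is a small sketch assuming a 256x256 input (the post does not state the input resolution); `conv_out` and `deconv_out` are hypothetical helper names.

```python
def conv_out(size, kernel_size=4, stride=2, padding=1):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1


def deconv_out(size, kernel_size=4, stride=2, padding=1):
    """Output size of a transposed convolution along one spatial dimension."""
    return (size - 1) * stride - 2 * padding + kernel_size


size = 256  # assumed input resolution
encoder_sizes = []
for _ in range(8):
    size = conv_out(size)
    encoder_sizes.append(size)

decoder_sizes = []
for _ in range(8):
    size = deconv_out(size)
    decoder_sizes.append(size)

print(encoder_sizes)  # [128, 64, 32, 16, 8, 4, 2, 1]
print(decoder_sizes)  # [2, 4, 8, 16, 32, 64, 128, 256]

# torch.cat along the channel axis needs equal spatial sizes:
# decoder block i must match encoder block 8 - i.
for i in range(1, 8):
    assert decoder_sizes[i - 1] == encoder_sizes[8 - i - 1]
```

Because every decoder block exactly undoes one encoder block, the outputs of block i and block 8-i always share the same height and width, which is what makes the concatenation valid.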

Discriminator

Structure: C64-C128-C256-C512

    import torch
    import torch.nn as nn


    class Discriminator(nn.Module):
        def __init__(self, in_channels, out_channels, nker=64, norm="bnorm"):
            super(Discriminator, self).__init__()

            # Four 4x4 stride-2 convolutions with LeakyReLU(0.2);
            # no normalization on the first layer.
            self.enc1 = CBR2d(1 * in_channels, 1 * nker, kernel_size=4, stride=2,
                              padding=1, norm=None, relu=0.2, bias=False)
            self.enc2 = CBR2d(1 * nker, 2 * nker, kernel_size=4, stride=2,
                              padding=1, norm=norm, relu=0.2, bias=False)
            self.enc3 = CBR2d(2 * nker, 4 * nker, kernel_size=4, stride=2,
                              padding=1, norm=norm, relu=0.2, bias=False)
            self.enc4 = CBR2d(4 * nker, out_channels, kernel_size=4, stride=2,
                              padding=1, norm=norm, relu=0.2, bias=False)

        def forward(self, x):
            x = self.enc1(x)
            x = self.enc2(x)
            x = self.enc3(x)
            x = self.enc4(x)

            # One real/fake probability per output location (patch)
            x = torch.sigmoid(x)
            return x

- A sigmoid is applied after the last layer.

- LeakyReLU with slope 0.2

Train

    import numpy as np
    import torch

    # netG, netD, optimG, optimD, ganLoss, l1Loss, wgt, dataLoader, device,
    # st_epoch, num_epoch and set_requires_grad are assumed to be defined earlier.

    # run training
    for epoch in range(st_epoch + 1, num_epoch + 1):
        netG.train()
        netD.train()

        generatorL1Loss = []
        generatorGanLoss = []
        discriminatorRealLoss = []
        discriminatorFakeLoss = []

        for batch, data in enumerate(dataLoader, 1):
            # forward netG
            label = data['label'].to(device)
            input = data['input'].to(device)
            output = netG(input)

            # backward netD
            set_requires_grad(netD, True)
            optimD.zero_grad()

            # D sees the condition (input) concatenated with the real or generated image
            real = torch.cat([input, label], dim=1)
            fake = torch.cat([input, output], dim=1)

            predictReal = netD(real)
            predictFake = netD(fake.detach())

            realLossD = ganLoss(predictReal, torch.ones_like(predictReal))
            fakeLossD = ganLoss(predictFake, torch.zeros_like(predictFake))
            # D loss is halved to slow D down relative to G
            discriminatorLoss = 0.5 * (realLossD + fakeLossD)

            discriminatorLoss.backward()
            optimD.step()

            # backward netG
            set_requires_grad(netD, False)
            optimG.zero_grad()

            fake = torch.cat([input, output], dim=1)
            predictFake = netD(fake)

            ganLossG = ganLoss(predictFake, torch.ones_like(predictFake))
            l1LossG = l1Loss(output, label)
            generatorLoss = ganLossG + wgt * l1LossG

            generatorLoss.backward()
            optimG.step()

            # record the losses
            generatorL1Loss += [l1LossG.item()]
            generatorGanLoss += [ganLossG.item()]
            discriminatorRealLoss += [realLossD.item()]
            discriminatorFakeLoss += [fakeLossD.item()]

            # print the running mean of each loss after every batch
            # (the original print referred to names that were never defined)
            print("TRAIN: EPOCH %04d / %04d | BATCH %04d / %04d | "
                  "GENERATOR L1 LOSS %.4f | GENERATOR GAN LOSS %.4f | "
                  "DISCRIMINATOR REAL LOSS: %.4f | DISCRIMINATOR FAKE LOSS: %.4f" %
                  (epoch, num_epoch, batch, len(dataLoader),
                   np.mean(generatorL1Loss), np.mean(generatorGanLoss),
                   np.mean(discriminatorRealLoss), np.mean(discriminatorFakeLoss)))

- Training uses mini-batches.

- The generator produces an image, and netD classifies it as real or fake, runs backpropagation, and takes a gradient descent step. After that, netG runs backpropagation and takes its own gradient descent step.

- fake.detach() is used only in the netD backward step, to keep unwanted gradients from propagating back into the generator while the discriminator is being updated.
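The effect of detach() and of freezing netD can be seen on a toy example. In the sketch below, two small linear layers stand in for the real networks, and `set_requires_grad` is written the way such a helper is usually written; its actual definition is not shown in the post, so treat it as an assumption.

```python
import torch
import torch.nn as nn


def set_requires_grad(net, requires_grad):
    """Enable or disable gradient tracking for every parameter of net."""
    for param in net.parameters():
        param.requires_grad = requires_grad


torch.manual_seed(0)
netG = nn.Linear(4, 4)  # toy stand-in for the generator
netD = nn.Linear(4, 1)  # toy stand-in for the discriminator
x = torch.randn(2, 4)
output = netG(x)

# Discriminator step: detach() cuts the graph, so netG receives no gradient.
set_requires_grad(netD, True)
netD(output.detach()).mean().backward()
g_grad_after_d_step = netG.weight.grad          # still None
d_grad_after_d_step = netD.weight.grad.clone()  # populated

# Generator step: netD is frozen, so gradients pass through it into netG only.
set_requires_grad(netD, False)
netD.zero_grad()
netD(output).mean().backward()
g_grad_after_g_step = netG.weight.grad          # now populated
```

Without detach(), the discriminator's backward pass would also traverse the generator's graph, which wastes computation and frees the graph that the generator step still needs.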

Reference

: https://medium.com/humanscape-tech/ml-practice-pix2pix-1-d89d1a011dbf

[ML Practice] pix2pix(1)
